Figure 1: Block diagram of a simple genetic algorithm (SGA) applied to an anti-jamming antenna array optimization problem (Copyright Jonathan Becker)

- All possible fitness function values must be greater than or equal to zero.

- The fitness function must not be binary (i.e., having only two values such as 0s or 1s).

- The fitness function must be finite. In other words, it cannot possess any singularities.

The third condition is obvious, as no computer can evaluate infinite-valued functions. I will explain the reasons for the first two conditions after I discuss the common types of fitness-proportional mate selection methods used with GAs:

- Random (i.e., biased roulette wheel) select

- Stochastic remainder select

- Tournament select

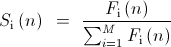

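In the random fitness-proportional mate selection method, a string i (where 1 ≤ i ≤ M) in generation n (where 0 ≤ n ≤ N) is selected with probability according to

$$P_i(n) = \frac{F_i(n)}{\sum_{j=1}^{M} F_j(n)}$$

where $F_i(n)$ is the fitness function value of string i in generation n.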

This equation is essentially a probability because it equals string i's fitness function value divided by the sum of every string's fitness value (i.e., the population's total fitness). This is why the fitness function values must be non-negative: it doesn't make sense to calculate probabilities with negative numbers. Likewise, the fitness function must not be infinite: an infinite value would make the total fitness infinite, so the selection probability of every string with finite fitness would be zero, and no meaningful mate selection could take place. The general idea is that strings with higher fitness will be chosen more often than strings with low fitness. For this reason, the fitness function must not be binary, because the algorithm would be either hit or miss. Unless the GA found the solution out of blind luck, it would go nowhere and would not optimize the problem's solution.

A major issue with random select, however, is that it has high variance, meaning that strings with low fitness will sometimes be selected for mating. You can think of random select as a biased roulette wheel where the width of each slot is proportional to the corresponding string's fitness. Suppose that the 15th slot out of 100 is the widest. Your best option would be to place your bets on slot 15. Although the ball will land on slot 15 more often than on any other single slot over N spins, it will still land on other slots, and you'll lose your money whenever that happens.
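The biased roulette wheel can be sketched in a few lines of Python. This is only a minimal illustration under my own assumptions: the function names `selection_probabilities` and `roulette_select` are hypothetical, the population is represented by a plain list of fitness values, and the checks simply enforce the non-negative and finite conditions listed earlier.

```python
import math
import random


def selection_probabilities(fitness):
    """Return each string's selection probability: fitness / total fitness."""
    if any(f < 0 or not math.isfinite(f) for f in fitness):
        raise ValueError("fitness values must be finite and non-negative")
    total = sum(fitness)
    if total == 0:
        raise ValueError("total fitness must be greater than zero")
    return [f / total for f in fitness]


def roulette_select(fitness, rng=random):
    """Spin the biased wheel once and return the index of the selected string."""
    probs = selection_probabilities(fitness)
    spin = rng.random()  # uniform in [0, 1)
    running = 0.0
    for i, p in enumerate(probs):
        running += p  # right edge of slot i on the wheel
        if spin < running:
            return i
    # Guard against floating-point round-off: fall back to the last
    # string that actually has a slot on the wheel.
    return max(i for i, p in enumerate(probs) if p > 0)


if __name__ == "__main__":
    fitness = [1.0, 3.0, 6.0]  # made-up example values
    picks = [roulette_select(fitness) for _ in range(1000)]
    print([picks.count(i) for i in range(len(fitness))])
```

Running the usage example a few times shows the variance issue: the fittest string wins most often, but the counts change from run to run, and the weakest string still gets picked.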

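Now, stochastic remainder select reduces mate selection variance by calculating the expected count of any given string i (its fitness $F_i(n)$ divided by the generation's average fitness) and separating that count into integer and remainder portions according to

$$\frac{F_i(n)}{F_{\mathrm{Avg}}(n)} = \mathrm{Int}_i + R_i, \qquad \mathrm{Int}_i = \left\lfloor \frac{F_i(n)}{F_{\mathrm{Avg}}(n)} \right\rfloor, \qquad 0 \le R_i < 1$$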

Stochastic remainder select deterministically selects that string Int times and then selects it one more time with probability R (where 0 ≤ R < 1). Note that FAvg is the average fitness in any given generation. Because most of the selected strings are chosen deterministically, and only the final copy of each string is chosen with probability R, stochastic remainder select has a much lower variance than random select. The random (i.e., stochastic) part of stochastic remainder select is equivalent to flipping a biased coin only once per string.
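Here is a minimal Python sketch of stochastic remainder select as described above. The function name `stochastic_remainder_select` and the list-of-fitness-values representation are my own assumptions for illustration. Note that with one coin flip per string, the size of the resulting mating pool can vary slightly from generation to generation; some implementations fill the leftover slots differently.

```python
import math
import random


def stochastic_remainder_select(fitness, rng=random):
    """Return a mating pool as a list of string indices.

    Each string i has expected count fitness[i] / average fitness.
    It is copied floor(expected count) times deterministically, then
    once more with probability equal to the fractional remainder.
    """
    if any(f < 0 or not math.isfinite(f) for f in fitness):
        raise ValueError("fitness values must be finite and non-negative")
    f_avg = sum(fitness) / len(fitness)
    if f_avg == 0:
        raise ValueError("average fitness must be greater than zero")

    pool = []
    for i, f in enumerate(fitness):
        expected = f / f_avg
        whole = math.floor(expected)      # the integer portion (Int)
        remainder = expected - whole      # the remainder portion (R)
        pool.extend([i] * whole)          # deterministic copies
        if rng.random() < remainder:      # one biased coin flip
            pool.append(i)
    return pool


if __name__ == "__main__":
    fitness = [3.0, 1.0, 2.0, 2.0]  # made-up values; the average is 2.0
    print(stochastic_remainder_select(fitness))
```

In this made-up example, string 0 has an expected count of 1.5, so it always appears once in the pool and appears a second time about half the time. Strings 2 and 3 have an expected count of exactly 1 and are always selected exactly once.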

In addition, tournament selection, as I understand it, randomly selects a small group of strings. The strings compete against each other (as in a tournament), and the string with the highest fitness out of the group is selected for mating. The process is repeated with another group of strings to select the second mate. The strings that are selected are removed from the tournament, and the process is repeated until anywhere from half to three quarters of the population is selected. To preserve good solutions, half of the population is typically selected for mating while the other half is typically copied from the parent generation without crossovers and mutations. The copied (i.e., elite) strings are the best strings from the parent population while the strings chosen for crossover and mating are the worst half. I don't use tournament selection because I believe that it forces a lot of genetic material to be lost. I prefer to use stochastic remainder select because I found that it works both in simulations and in situ with hardware, and the majority of the parent population's genetic material is used to create the children population.
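For comparison, here is a rough Python sketch of tournament selection as I described it above, where winners are removed from the tournament once selected. The function name `tournament_select` and the parameters `num_mates` and `group_size` are hypothetical names chosen for this example, and other variants of tournament selection exist (for instance, ones that leave the winners in the population).

```python
import random


def tournament_select(fitness, num_mates, group_size=3, rng=random):
    """Return the indices of num_mates strings chosen by tournaments.

    Each round draws a random group from the strings still in the
    tournament; the fittest member of the group is selected as a mate
    and removed from later rounds.
    """
    remaining = list(range(len(fitness)))
    mates = []
    while len(mates) < num_mates and remaining:
        size = min(group_size, len(remaining))
        group = rng.sample(remaining, size)            # random competitors
        winner = max(group, key=lambda i: fitness[i])  # highest fitness wins
        mates.append(winner)
        remaining.remove(winner)                       # winner leaves the tournament
    return mates


if __name__ == "__main__":
    fitness = [4.0, 9.0, 1.0, 7.0, 3.0, 8.0]  # made-up example values
    # Select half of the population for mating.
    print(tournament_select(fitness, num_mates=len(fitness) // 2))
```

With a small `group_size`, a weak string can still win a round if it happens to be grouped with even weaker strings. A group as large as the whole population reduces the method to picking strings in strict fitness order.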

There are other types of mate selection methods, but the three I described are the most commonly used, with random select being the first method developed in GA computer science theory. I've also explained what properties the fitness function must have in order for it to be used in a GA. Because of its ability to search multiple sections of the solution search space in parallel, the GA can optimize many multi-objective engineering problems, including rocket nozzle design (i.e., maximizing thrust while minimizing nozzle weight), metallic bridge design (i.e., maximizing load handling while simultaneously minimizing weight), and so forth. The GA can create viable and unique solutions that few humans could design by hand alone.

Best,

Jonathan Becker

ECE PhD Candidate

Carnegie Mellon University